Gordon E. Moore co-founded Intel with Robert Noyce on July 18, 1968, in Mountain View, California. Three years earlier, in 1965, he had predicted that the number of transistors on a microchip would double every year; in 1975 he revised the pace to roughly every two years. The popular version of the prediction adds that the cost of computers is halved over the same time frame, which makes the growth exponential. This prediction is called Moore's law. With the advent of affordable computing for consumers during the late 1970s and early '80s, the law was proving to be valid. Technology was advancing rapidly and faster hardware arrived every year, which sharply reduced the cost of older hardware.
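To get a feel for how fast this kind of doubling compounds, here is a minimal sketch in Python. It assumes a clean doubling every two years, which real chips only approximate, and the function name `growth_factor` is made up for illustration.

```python
def growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Multiplier on transistor count after `years`.

    Assumes exactly one doubling per `doubling_period` years,
    an idealized reading of Moore's law.
    """
    return 2.0 ** (years / doubling_period)


# How much denser a chip would be after 2, 10, and 20 years.
for years in (2, 10, 20):
    print(f"{years:>2} years -> x{growth_factor(years):,.0f}")
```

Under that assumption a chip holds 32 times as many transistors after ten years and 1,024 times as many after twenty, which is why hardware from only a few years earlier fell behind so quickly.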

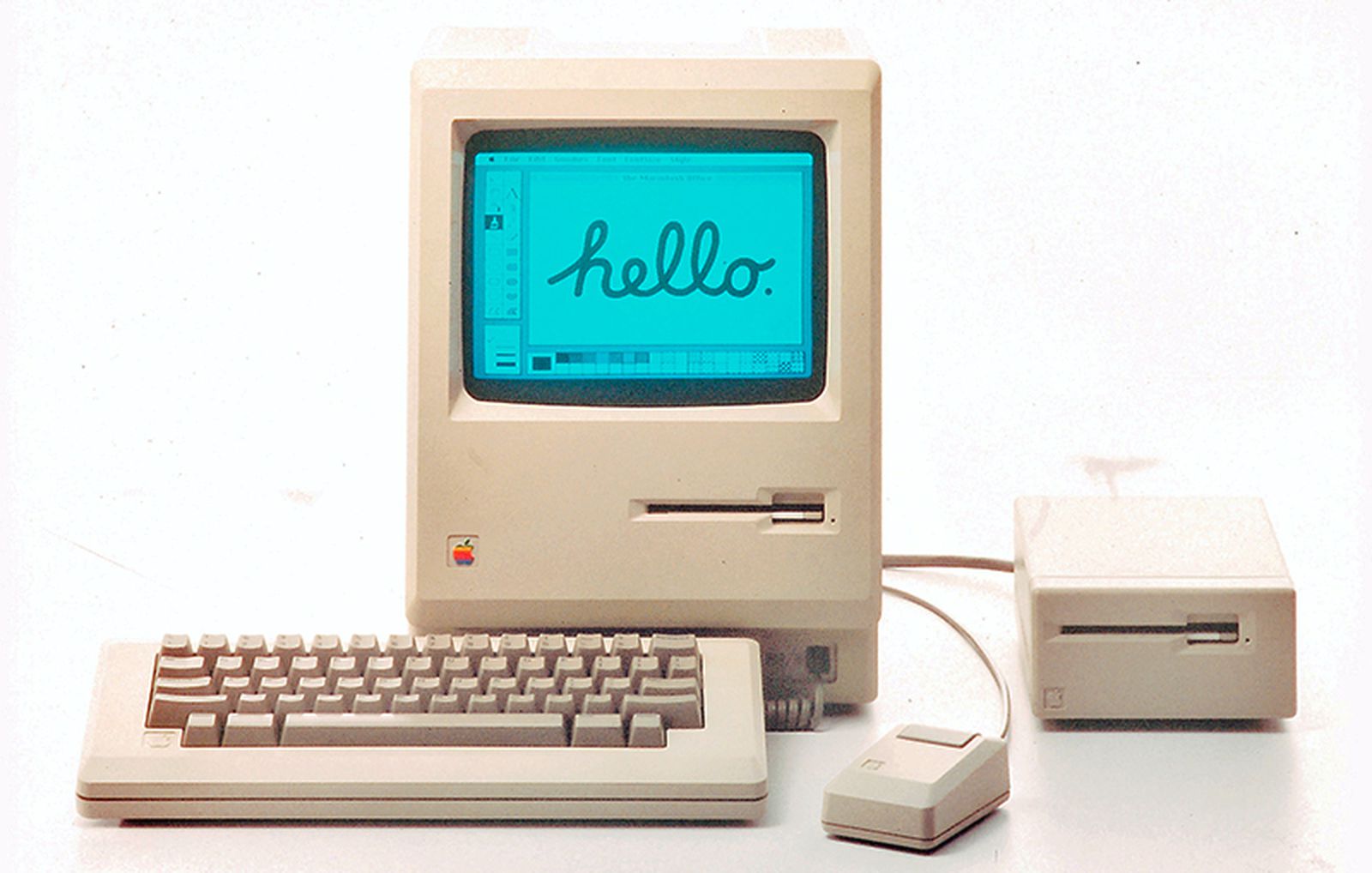

Take, for example, the Commodore 64, one of the most popular home computers of the 1980s. Released in August 1982 for 595 dollars (~1,575 dollars in 2020), it featured a 1.023 MHz processor and 64 KB of RAM. In January 1984, less than two years later, the revolutionary Apple Macintosh was released for 2,495 dollars (~6,210 dollars in 2020), with an 8 MHz processor and 128 KB of RAM as standard. It was also the first relatively accessible computer with a graphical user interface. In under two years of innovation, huge improvements had been made: a mouse, a GUI, and a substantial jump in overall computational power. With computers progressing so rapidly, many consumers bought in knowing that their machine would be obsolete only a few years later.

1984 Apple Macintosh

Moore's law continued through the 1990s, but it ultimately slowed down during the later 2000s and beyond. Today you can still use a computer that is over ten years old for most essential tasks. Several decades ago this simply wasn't the case. As a result, many believe that Moore's law is dead. Even its originator, Gordon E. Moore himself, stated in April 2005 that the projection cannot be sustained indefinitely. The rate of obsolescence, and of progress overall, has diminished greatly. It seems that future progress in computing will no longer rely on sheer increases in the number of transistors or in raw speed.

Sources:

https://ourworldindata.org/technological-progress

https://www.investopedia.com/terms/m/mooreslaw.asp

https://www.intel.com/content/www/us/en/silicon-innovations/moores-law-technology.html

https://history-computer.com/ModernComputer/Personal/Commodore.html

https://en.wikipedia.org/wiki/Moore%27s_law


This was a very interesting post, as Bill Shao and I actually just recently had a conversation about this law. Just to add on, Moore's Law, in its early years and even now, has been more than just an observation or a prediction; it is also the goal that companies such as Intel have been compelled to work towards. In other words, since the publication of Moore's Law (and the revision of its doubling period from the original one year to two years), computer companies around the world have used it to gauge the progress they ought to make in the following years. However, as you said, Moore's law is indeed beginning to show signs of irrelevance in recent years, as transistors have gotten so small that simple physics will soon prevent them from continuing to decrease in size and cost at the same time. Suggestions, such as changing materials or going quantum, have been put forward, but none has been successfully implemented.

Sources:

https://www.theverge.com/2018/7/19/17590242/intel-50th-anniversary-moores-law-history-chips-processors-future